Attribute calculations

You can now calculate new attributes with Python code, which lets you engineer new features for your data within refinery. We also added a new chart that shows the Weak Supervision confidence distribution at a single glance. If you signed up for our managed version, you can enjoy a freshly added role management system that lets you decide who gets to label what. For details on all of this, take a look at the full changelog - you won't regret it!

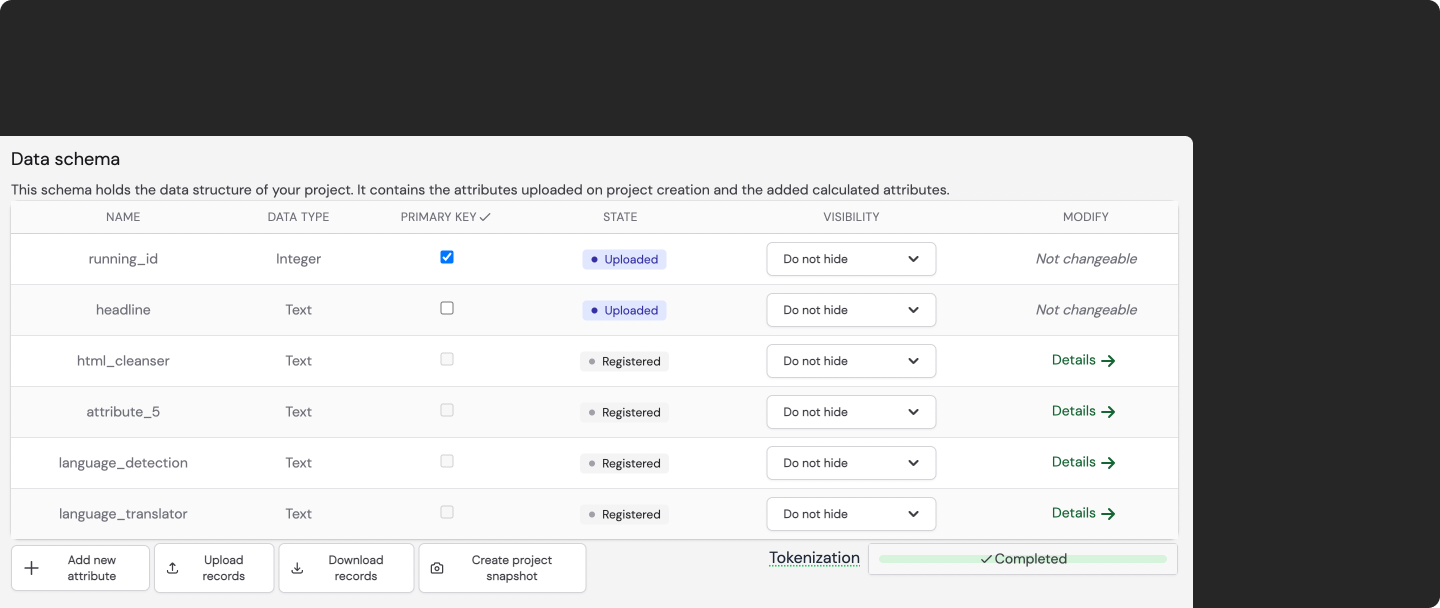

Calculate new attributes directly in refinery

This is an exciting one! The previous workflow in refinery required you to have your data ready at the start of your project. After that, the schema was set and you could not really change much. This was especially a problem when you realized, a few days into the project, that you were missing some features.

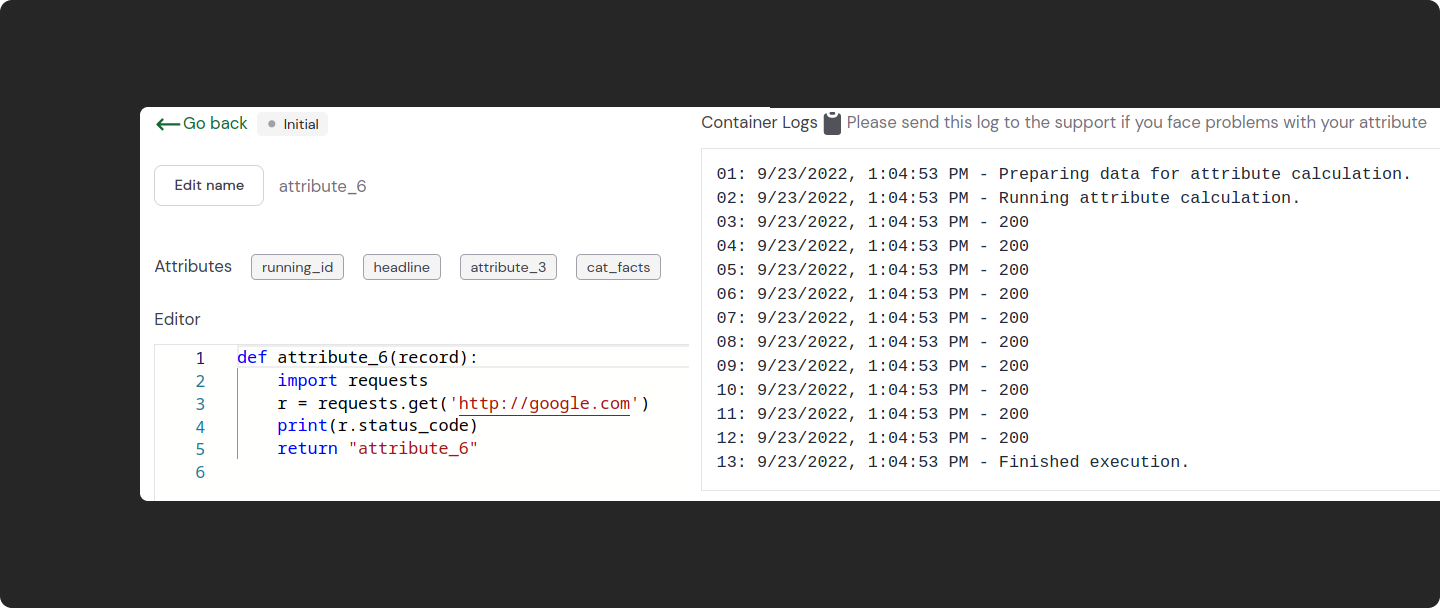

This changes today! You now have the freedom to create as many new attributes as you like programmatically, all within your beloved refinery user interface. This is huge news, as you can engineer features that are then usable in Labeling Functions, Active Learners, and the advanced filtering of the Data Browser (just to name a few). The best thing: HTTP requests work (see Fig. 2). That means you can easily incorporate any API you want to create new attributes for your data!

To give you a starting point, here are some ideas that you could use this new feature for:

- Add a new attribute that combines all text attributes into a single one, create a new embedding on top of it, and create a new Active Learner that can learn from the whole record instead of just individual attributes

- Calculate the length of your text attributes and then look at your records in the Data Browser ordered by length to find potential errors in your data (e.g. empty attributes)

- Enrich your data with sentiment by querying a custom model behind an API so you can write Labeling Functions that need that kind of information

By now you get the idea: the possibilities are endless, and you can finally do all of it within refinery itself. There is no need to go back to your notebook and re-upload all the data.
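As a rough illustration of the first two ideas, here is a minimal sketch of what such attribute functions could look like. It assumes that a record behaves like a dictionary mapping attribute names to plain strings; the function names and the sample record are made up for this example and are not refinery's actual interface.

```python
def combine_text(record, attribute_names, separator=" "):
    """Join the non-empty text attributes of a record into one string."""
    parts = [
        str(record[name]).strip()
        for name in attribute_names
        if record.get(name)  # skips missing, None, and empty values
    ]
    return separator.join(parts)


def text_length(record, attribute_name):
    """Return the character length of a text attribute (0 if missing or empty)."""
    value = record.get(attribute_name) or ""
    return len(str(value))


# Hypothetical sample record, assumed to be a plain dict of strings.
record = {"headline": "Refinery adds attribute calculation", "body": ""}

combined = combine_text(record, ["headline", "body"])
lengths = {name: text_length(record, name) for name in record}
```

Sorting records by a length attribute like this one puts empty values at one end of the list, which makes them easy to spot.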

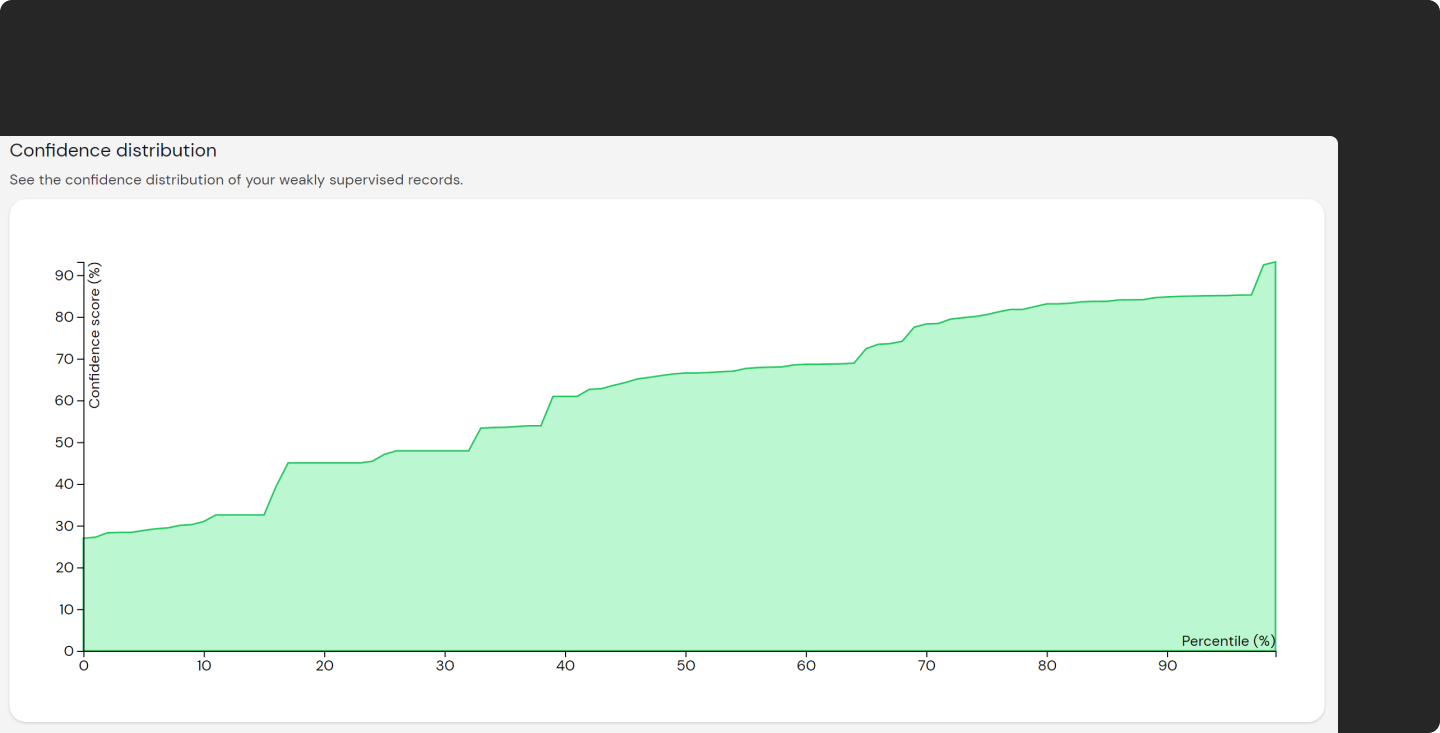

Confidence distribution chart

Weak Supervision is the heart and soul of semi-automated labeling in refinery. Previously, you could see the number of records with a Weak Supervision label, and you could filter and sort by confidence in the Data Browser. With this release, you can inspect your Weak Supervision confidence distribution at a single glance, which gives you better insight into the Weak Supervision quality before you export your data.

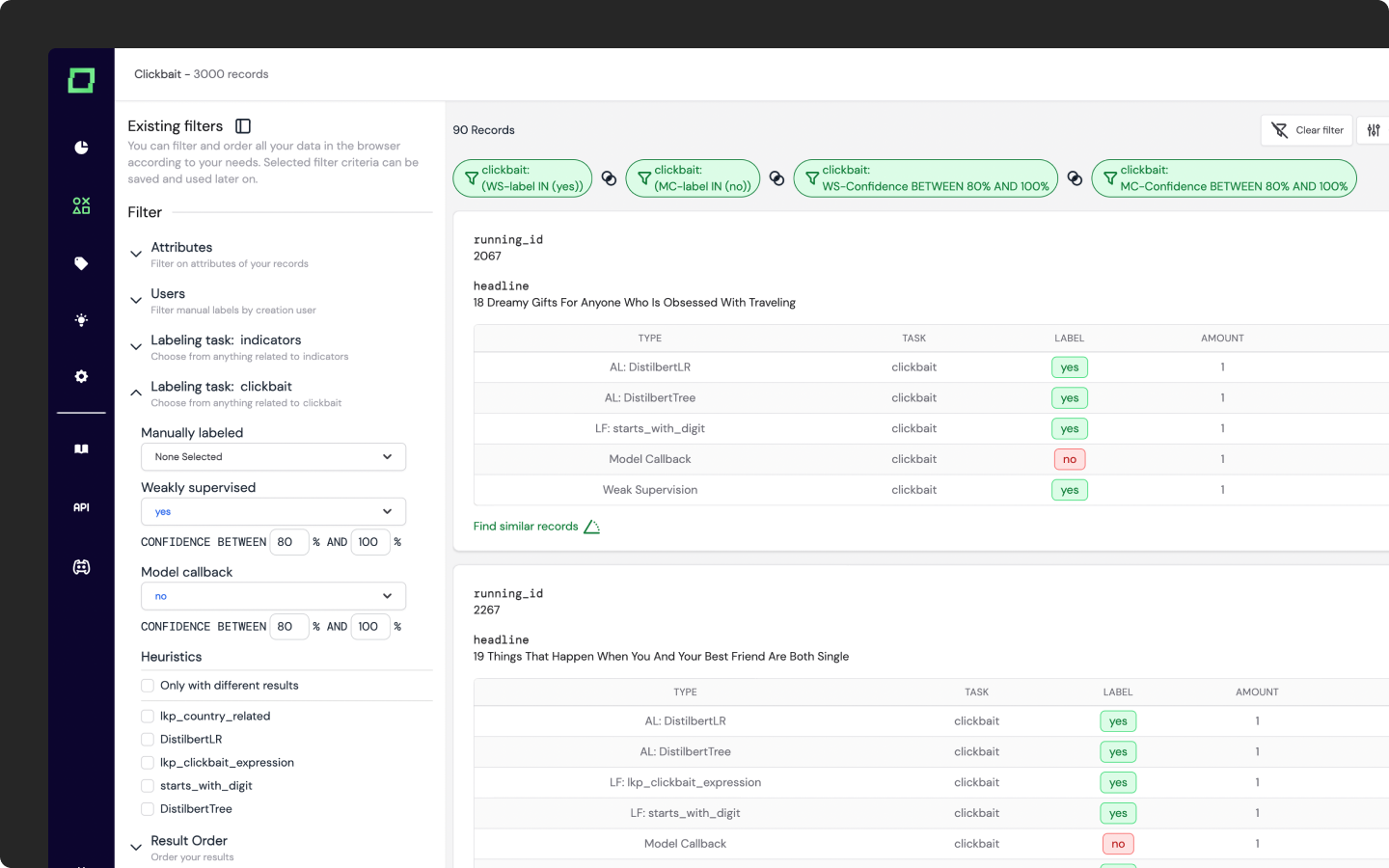
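To make the idea of a confidence distribution concrete, the sketch below bins a list of confidence values into equally wide buckets between 0 and 1. This is only an illustration of the concept, not how refinery computes its chart; the function name and the sample values are assumptions.

```python
def confidence_histogram(confidences, num_bins=10):
    """Count how many confidence values fall into each equally wide bin of [0, 1]."""
    counts = [0] * num_bins
    for confidence in confidences:
        if not 0.0 <= confidence <= 1.0:
            raise ValueError(f"confidence out of range: {confidence}")
        # A confidence of exactly 1.0 is placed in the last bin.
        index = min(int(confidence * num_bins), num_bins - 1)
        counts[index] += 1
    return counts


# Hypothetical weak supervision confidences for five records.
sample = [0.05, 0.15, 0.55, 0.95, 1.0]
histogram = confidence_histogram(sample)
```

A distribution that piles up in the lower bins suggests that many weakly supervised labels deserve a second look before export.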

Add custom model predictions to your project

With this version, you can upload your custom model predictions to your refinery project using our SDK. We offer an easy workflow that consists of downloading your data, training a classifier, and uploading the predictions to your project. We built adapters for some of your favorite machine learning frameworks to make the process as easy as possible. To get started, take a closer look at the "Callbacks" section of the SDK readme!

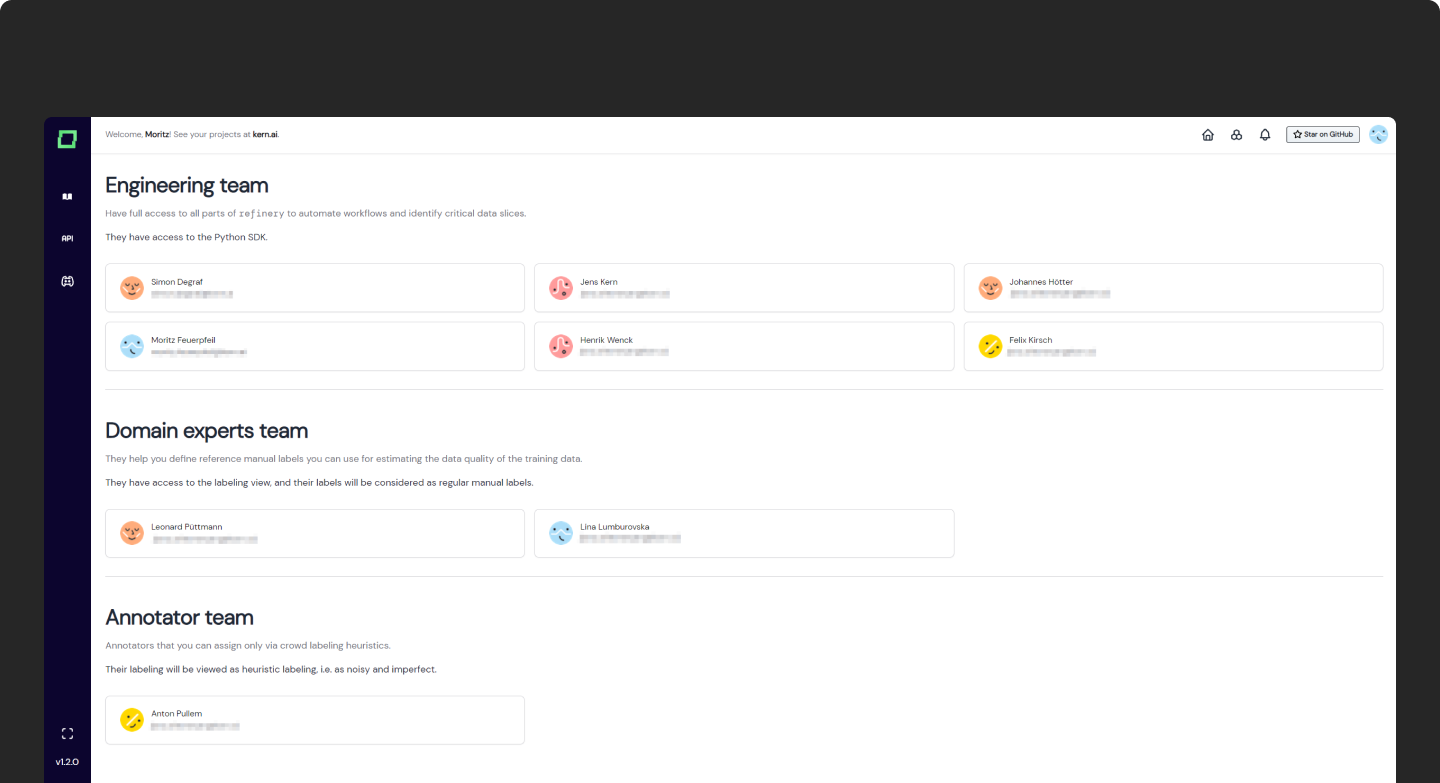
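The three steps of that workflow (get the data, train a classifier, produce predictions) can be sketched without any framework. The example below is a deliberately tiny token-count classifier written in plain Python; it does not use the refinery SDK, and the record layout and the shape of the `predictions` list are assumptions made for illustration. For the actual download and upload calls, refer to the SDK readme.

```python
from collections import Counter, defaultdict


def train(records):
    """Count how often each token appears per label."""
    token_counts = defaultdict(Counter)
    for record in records:
        token_counts[record["label"]].update(record["text"].lower().split())
    return token_counts


def predict(model, text):
    """Return (label, confidence) based on token overlap with each label."""
    tokens = text.lower().split()
    scores = {
        label: sum(counts[token] for token in tokens)
        for label, counts in model.items()
    }
    total = sum(scores.values())
    if total == 0:
        return None, 0.0  # no known token, so no prediction
    best = max(scores, key=scores.get)
    return best, scores[best] / total


# Step 1: data that would normally be downloaded from the project (hypothetical).
labeled = [
    {"id": 1, "text": "great product", "label": "positive"},
    {"id": 2, "text": "terrible support", "label": "negative"},
]
unlabeled = [{"id": 3, "text": "great support team great"}]

# Step 2: train the classifier.
model = train(labeled)

# Step 3: collect predictions in a form that could be handed to an upload step.
predictions = []
for record in unlabeled:
    label, confidence = predict(model, record["text"])
    predictions.append(
        {"record_id": record["id"], "label": label, "confidence": confidence}
    )
```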

Role system

This has been in the works for a long time and it is finally here! We are so happy to introduce role management into our managed version. Three roles are currently available: Engineer, Domain Expert, and Annotator.

Why is this just totally awesome? Because now you can dissect your data into important slices and distribute them to people for labeling. If you want to re-label the data that has low weak supervision confidence, you can just create a slice for it and send it over to your colleagues who have the domain knowledge to reliably label this data. It even allows for crowd labeling.
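Conceptually, a slice for re-labeling is just a filter over your records. The following sketch selects records whose weak supervision confidence lies below a threshold; the record layout, the `confidence` key, and the threshold of 0.5 are assumptions for illustration, and in refinery you would create the slice in the user interface instead.

```python
def low_confidence_slice(records, threshold=0.5):
    """Return the records whose confidence is below the threshold."""
    return [record for record in records if record["confidence"] < threshold]


# Hypothetical records with weak supervision confidences.
records = [
    {"id": 1, "confidence": 0.92},
    {"id": 2, "confidence": 0.31},
    {"id": 3, "confidence": 0.48},
]

to_relabel = low_confidence_slice(records, threshold=0.5)
```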

Read on to find out how the roles differ and how they could be applied to your project!

Engineer

The Engineer has full access to all parts of refinery. They see the regular refinery interface with the addition that they can now distribute labeling tasks to annotators.

Domain Expert

The Domain Expert has access only to the labeling view of their projects. Within that view, they can freely choose static slices they want to work on. Their labels are saved as regular labels.

This role should be given to someone you trust to label your data reliably, hence the name Domain Expert.

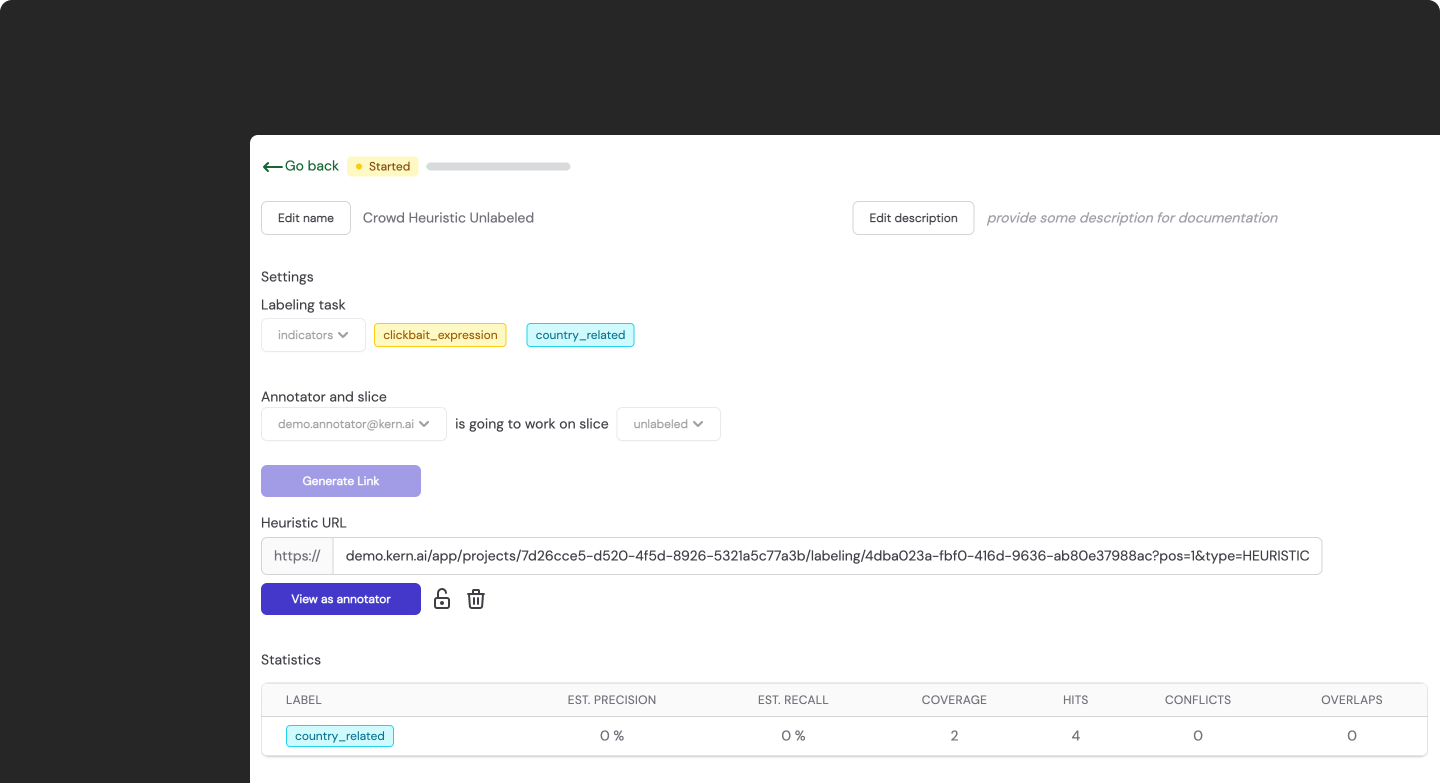

Annotator

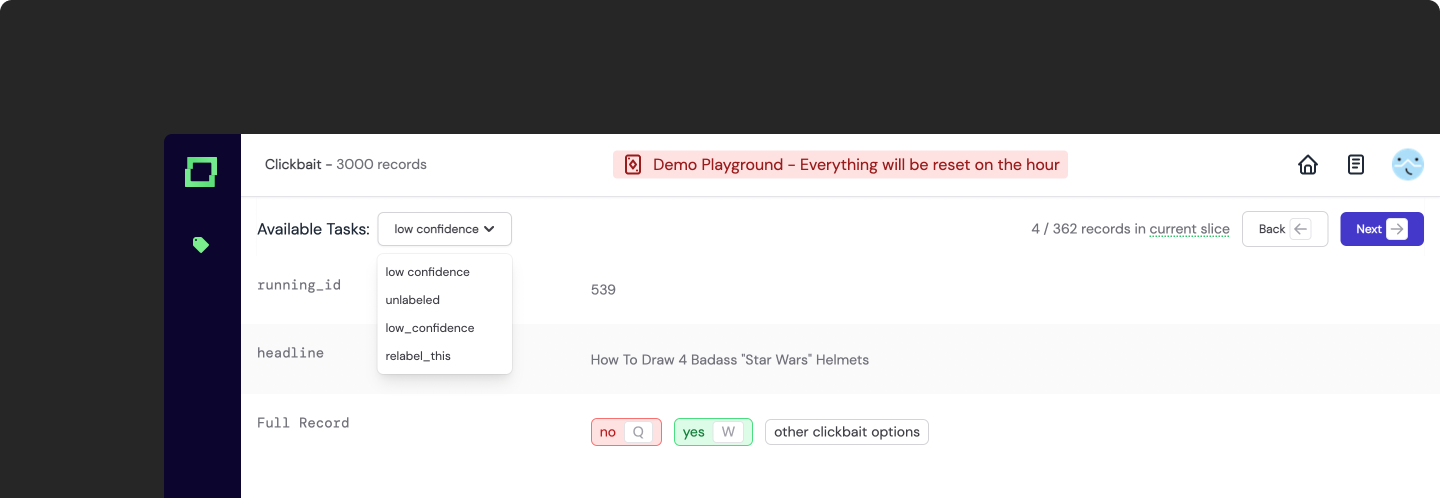

The Annotator also has access only to the labeling view of a project. There are important differences from the Domain Expert, though.

Firstly, Annotators access the labeling view by following a link that the Engineer generates in the Heuristics tab (see the description of "Engineer" above). That link restricts the Annotator to a given slice and a given task, neither of which they can choose themselves.

Secondly, their labels are not treated the same as those of Domain Experts or Engineers. Instead, they are saved as values of the heuristic that was used to generate the link.

Annotators are not trusted to label the data reliably, hence these restrictions. They can be crowd-labelers or just people from a different domain who are expected to make more labeling mistakes.
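The differences between the three roles can be summarized in a small sketch. All names below (`Role`, `Access`, `store_label`, and the `"manual"` and `"heuristic"` targets) are hypothetical and only model the behavior described above; they are not refinery's internal data model.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    ENGINEER = "engineer"
    DOMAIN_EXPERT = "domain_expert"
    ANNOTATOR = "annotator"


@dataclass(frozen=True)
class Access:
    full_interface: bool  # sees all of refinery, not just the labeling view
    chooses_slice: bool   # may pick the slice to work on
    label_target: str     # "manual" (regular label) or "heuristic"


ACCESS = {
    Role.ENGINEER: Access(full_interface=True, chooses_slice=True, label_target="manual"),
    Role.DOMAIN_EXPERT: Access(full_interface=False, chooses_slice=True, label_target="manual"),
    Role.ANNOTATOR: Access(full_interface=False, chooses_slice=False, label_target="heuristic"),
}


def store_label(role, label, heuristic_name=None):
    """Describe where a label from the given role would be saved."""
    if ACCESS[role].label_target == "heuristic":
        if heuristic_name is None:
            raise ValueError("Annotator labels need the heuristic behind their link")
        return {"target": "heuristic", "heuristic": heuristic_name, "value": label}
    return {"target": "manual", "value": label}


expert_label = store_label(Role.DOMAIN_EXPERT, "positive")
crowd_label = store_label(Role.ANNOTATOR, "positive", heuristic_name="crowd_batch_1")
```

Because Annotator labels end up as heuristic values, they can be weighed against other heuristics instead of being taken as ground truth.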

Crowd labeling support

The new role system allows for crowd labeling. You can choose exactly which data and which task people should label and send it out using links. The only prerequisite is an active Kern account that is registered in your organization; this registration is currently done manually by us. So if you want to incorporate Annotators in your project, please contact us!

Minor changes

- fixed bugs in the ML execution environment

- you can now use the SDK to import more data into your project (JSON format)

- quick add: click on the weak supervision label to add it as a manual label